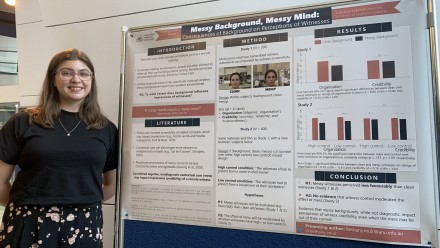

Improving human perception of low-resolution face images

Low-resolution face images occur in several settings that require a human observer to identify the face or its expression. For example, a crime eyewitness may have seen the perpetrator's face only blurred by distance. This project aims to improve low-resolution face perception. Previous approaches have attempted only to improve general image visibility. Here, the novel theoretical idea is to also alter the face's structure, tapping knowledge about higher-level face coding. The manipulations are caricaturing and whole-then-part alternation, derived from face-space and holistic processing theories, respectively. Effects of viewpoint and of own- versus other-race faces will test the practical generalisability of the new methods, and refine theory.

There are many settings in which people need to recognise faces from poor quality images. This includes when authorities ask the public to help identify crime suspects in surveillance images, and when immigration officials match CCTV images to watched-for targets. Using knowledge of how the human visual system perceives faces, this project develops methods of altering the face image to improve recognition of faces in these naturalistic contexts.

Project Aims and Background

Following the 2013 Boston marathon bombing, the FBI released surveillance CCTV images of the two suspects' faces [1], and requested the public's help to identify the men. Unfortunately, despite widespread viewing of these images within the Boston community, no-one came forward to identify the men, and they were located only via other means and after they had killed a policeman. Following their capture, high-school classmates and teachers easily recognised high-resolution photographs of the suspects [2]. Crucially, however, these people had apparently failed to recognise them earlier from the FBI-released CCTV images.

The above is a situation where human perception of low-resolution faces is required. Other settings include eyewitness testimony where the crime perpetrator was seen in the distance, or only with peripheral vision [3]; a human operator monitoring video from an unmanned flying drone (e.g. travelling into a bushfire zone), where, even if a high-resolution camera is installed, long viewing distances or limits on download speed can reduce the spatial and temporal resolution at which faces can be viewed in real time [4]; and vision through visual prosthetics (bionic eyes), where we simulate how faces might appear using normal-vision observers [5]. Across these various contexts, the specific type of face image varies: all are low-resolution, but some are pixelated (CCTV), some are 'phosphenised' (prosthetic simulation), and some are blurred (eyewitness testimony of a distant perpetrator) [Fig1].
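As a rough illustration of two of the image types named above, the following is a minimal sketch of how pixelated and blurred versions of a greyscale image might be simulated. It is an assumption for illustration only, not the procedure used in the project: the function names `pixelate` and `box_blur`, the `block` and `radius` parameters, and the use of a simple box blur (rather than, say, a Gaussian) are all hypothetical choices. Phosphenised images are not covered.

```python
def pixelate(image, block):
    """Replace each block x block tile of a greyscale image with its mean.

    `image` is a list of equal-length rows of numbers. `block` is the tile
    size in pixels (a hypothetical parameter, not a value from the proposal).
    """
    if block < 1:
        raise ValueError("block must be >= 1")
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for top in range(0, height, block):
        for left in range(0, width, block):
            # Tiles at the right/bottom edge may be smaller than `block`.
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            mean = sum(image[r][c] for r in rows for c in cols) / (len(rows) * len(cols))
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


def box_blur(image, radius):
    """Blur by averaging each pixel with its neighbours within `radius`.

    A box blur is used here as a simple stand-in for optical blur;
    neighbourhoods are clipped at the image border.
    """
    if radius < 0:
        raise ValueError("radius must be >= 0")
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            rows = range(max(0, r - radius), min(height, r + radius + 1))
            cols = range(max(0, c - radius), min(width, c + radius + 1))
            total = sum(image[i][j] for i in rows for j in cols)
            out[r][c] = total / (len(rows) * len(cols))
    return out
```

Both functions keep the original image dimensions, so a degraded image can be compared pixel-for-pixel with its source.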

This project provides the first integrated study aimed at developing manipulations of the face image that can enhance normal human ability to recognise a person's identity, and their expression, from low-resolution facial images.

Previous studies show partial success from methods that improve 'front end' processing applicable to all visual recognition, such as increasing contrast of high spatial frequencies in the image to improve visibility of fine detail [6,7]. However, these approaches do not attempt to tap into knowledge about how faces are coded by the visual system.

The present study is inspired by a novel theoretical approach, namely that further improvement should be possible by targeting known properties of mid- and/or high-level face processing. Further, this approach focuses on improvements with the widest applicability across the different forms of low-resolution image.

The novel approach is to manipulate the shape information within the face. Shape changes target many mid- and high-level visual processing areas that show strong fMRI response to faces. Our two shape manipulations – caricaturing and whole-then-part-alternation – derive from established empirical findings and theoretical understandings from the extensive literature on perception and cognition of normal-resolution faces.

Specific Aims

- Caricaturing. Test whether participants' recognition of a low-resolution face's identity or expression is improved by caricaturing the face away from an average face. This procedure exaggerates the identity or expression information specific to that face (somewhat like cartoonists' caricatures, but using morphing procedures on photographs).

- Whole-then-part alternation. Test whether recognition can be improved using a procedure that alternates an enlarged, and thus higher-resolution, view of a local face region (e.g. the eyes, or the mouth) with an original-size, and thus lower-resolution, view of the whole face.

- Generalisability. Test the generalisability of improvements arising from these manipulations to cases of practical and theoretical relevance, namely: different forms of low-resolution images (pixelated, blurred, phosphenised); various practical face tasks including perception (e.g. naming a facial expression), matching (e.g. as occurs when police need to judge whether a low-resolution CCTV image is a genuine match to any of a set of candidate faces retrieved by computer algorithm from an offender database), and memory (e.g. as relevant to eyewitness testimony, where the image manipulation can be applied during a police recognition test); and different face types (familiar, unfamiliar; own-race, other-race; front view, profile; upright, inverted).

- Use the results to develop an improved theoretical model of which stages of the visual system contribute to caricature advantages, and to whole-then-part advantages, for low-resolution faces. Currently, the precise origins within mid/high-level vision of caricature and whole-then-part advantages are not well understood, even for high-resolution faces. Our results will narrow down possibilities by linking results from our generalisation studies to known and emerging properties of specific brain regions (e.g. some areas are sensitive to race or viewpoint, others are not). This enhanced theoretical understanding will in turn have implications for our final aim:

- Test whether combining the different methods (e.g. caricaturing plus whole-then-part; caricaturing plus an early-vision approach increasing visibility of fine detail) improves recognition beyond any single method alone. This uses the logic that benefits should add together where manipulations tap independent stages of the visual system.
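The caricaturing procedure in the first aim (moving a face away from an average face) can be illustrated with a minimal sketch on facial landmark coordinates. This is an illustrative assumption, not the project's morphing pipeline: it handles only landmark positions (a real photographic caricature would also warp the image texture to the new landmarks), and the function name `caricature` and the `level` parameter are hypothetical.

```python
def caricature(face, average, level):
    """Exaggerate how a face's landmarks differ from an average face.

    `face` and `average` are equal-length lists of (x, y) landmark points
    in corresponding order. `level` is the proportional exaggeration
    (hypothetical parameter): 0.0 returns the original face, 0.5
    exaggerates every difference from the average by 50%, and negative
    values move the face towards the average (an anti-caricature).
    """
    if len(face) != len(average):
        raise ValueError("face and average must have the same number of landmarks")
    scale = 1.0 + level
    return [
        (ax + scale * (fx - ax), ay + scale * (fy - ay))
        for (fx, fy), (ax, ay) in zip(face, average)
    ]


# Example: two eye-corner landmarks that sit wider apart than the average.
face = [(10.0, 20.0), (30.0, 20.0)]
average = [(12.0, 20.0), (28.0, 20.0)]
print(caricature(face, average, 0.5))  # → [(9.0, 20.0), (31.0, 20.0)]
```

In the example, the face's eye corners are each 2 units further out than the average, so a 50% caricature pushes them 3 units out, making the distinctive feature more extreme.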